
8 May 1998

High-performance Computing, National Security Applications, and Export Control Policy at the Close of the 20th Century


Policy Options for a Control Threshold

As described in Chapter 1, a control threshold is viable only when it satisfies the three basic premises of the export control policy. These are:

1. There exist applications of national security importance whose performance requirements lie above the control threshold;

2. There exist countries of national security concern with the scientific and military wherewithal and interest necessary to pursue these applications should the necessary computational resources become available; and

3. There are features of high-performance computing systems that make it possible to limit access by entities of national security concern to computational performance levels at or above the control threshold, given the resources the government and industry are willing to bring to bear on enforcement.

In this chapter we integrate the results of previous chapters to establish the range within which a control threshold may be viable and offer a number of policy options.

Lower Bound Trends

The central question with respect to the lower bound of a viable range for a control threshold is: 'What level of computational performance, across a broad spectrum of applications and workloads, is available to foreign entities of national security concern at various points in time?' A control threshold set below this level is not likely to be effective. As discussed in Chapter 3, the answer to this question is a function of several factors: the controllability of computing platforms, the availability of computing platforms from foreign sources beyond the control of the U.S. Government and its export control partners, and the scalability of platforms. Since controllability is a function not only of technological and market factors but also of enforcement resources and commitment, determining what is "controllable" is in part a decision that policy-makers will have to make. Policy-makers will also have to decide how scalability is to be handled by the export licensing process. If scalability is not taken into account explicitly, the computational performance attainable by end-users is likely to be considerably higher than if it is. Following a brief examination of past forecasts of controllability, this section presents a number of policy options and their implications for the lower bound.

A retrospective

The lower bound projections made in 1995 were based on the assumption that the existing export licensing process would remain fundamentally unchanged. That is, systems would be evaluated on the basis of the CTP of the precise configuration being exported, rather than on other factors such as the extent to which the system could be easily scaled to a larger configuration. The lower bound charted the performance level that end-users could reach by acquiring, legitimately or not, small-configuration symmetric multiprocessor (SMP) systems and recombining them or adding boards to create larger-configuration SMPs. The projections were made more conservative by incorporating a time lag such that systems did not factor into the projections until two years after they were introduced on the market. Given this regime, did the projections made in 1995 (see Figure 6 in [1] or Figure 3.3 in [2]) come to pass? For the most part, they did. The projections for 1996 and 1997 were based on systems introduced in 1994 and 1995, and developments after 1995 have not changed those data points. Did the 1995 study accurately forecast the performance level end-users could attain in 1998? This level would have been based on systems introduced in 1996. Comparing Figure 3.3 of the earlier study with Figure 27 in Chapter 3, we can observe a few differences for 1998. The projected 16,000 Mtops performance of the CS 6400 from Cray's Business Systems Division did not come to pass. That division was sold to Sun Microsystems in 1996, and much of the CS 6400 technology was incorporated into the Ultra Enterprise 10000, which was not introduced until 1997. Furthermore, we have chosen not to include the Ultra Enterprise 10000 in the lower bound curve because, unlike other rack-based systems, it is not sold in small configurations and can therefore be "caught" by control thresholds set at the lower bound.

The SGI prediction for 1998 (nearly 15,000 Mtops) appears to have been accurate. However, a qualification needs to be made. The system that defined that performance level was a 36-processor PowerChallenge (not PowerChallenge Array), introduced in 1996 and based on the R10000/200 microprocessor. First, while this system was introduced in 1996, there were problems with the manufacturing of the R10000: actual processors ran not at 200 MHz, but at 180 or, later, 195 MHz. Second, examination of the TOP500 supercomputer list from November 1997 shows that the largest configuration sold was a 32-processor version (to a Japanese customer). There are numerous 24-processor installations, but no 36-processor installations. A conclusion that can be drawn about this (and, indeed, about all bus-based SMP platforms) is that applications have had difficulty using the last few processors of a configuration effectively, and customers often do not feel that the additional cost of the full configuration is justified. While the level of nearly 15,000 Mtops was theoretically attainable, it is unlikely that many users would actually try to install and use a full configuration.

Is the two-year time lag justified? We believe so. The rationale for that lag was that two years would be needed for a secondary market to develop for the systems, usually rack-based, that defined the curve. The current study plots deskside systems using a one-year lag. A review of vendors of refurbished systems in early 1998 supports the prediction that by the end of 1997 or early 1998, systems with a full-configuration performance above 7,000 Mtops would be available on the secondary market. For example, refurbished DEC AlphaServer 8400 5/350 systems (CTP 7,639 Mtops, introduced in late 1996) and Sun Ultra Enterprise 4000/250 systems, a high-end deskside model (CTP 7,062 Mtops, introduced in early 1997), are available on the secondary market. Refurbished-systems vendors will configure and test a system before shipping, but usually perform no on-site services except on special request and at additional cost. Year-old deskside systems are widely available on the secondary market. For example, the AlphaServer 4100 5/466 (CTP 4,033 Mtops, introduced in early 1997) is widely available from brokers not only in the United States, but also in the Netherlands, Austria, and other countries. Likewise, individual boards are widely available from such sources. Systems introduced less than one year ago are largely absent from the secondary market. While the scalability of systems has made it possible for end-users to reach a performance level of 7,000 Mtops by legitimately acquiring configurations of lower performance, the presence on the secondary market of systems scalable to this level means that this performance can be reached even more easily without the knowledge of the U.S. Government, the HPC manufacturers, or affiliated organizations.
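The lag convention can be expressed as a small calculation. The sketch below is a simplified illustration, not the study's projection method: it assumes the lower bound in a given year is just the highest full-configuration CTP among systems whose lag has elapsed, rounds introduction dates to calendar years, and uses only the three systems cited in this section. The class and function names, and the lag assigned to each system, are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    ctp_mtops: int   # CTP of the full configuration, in Mtops
    introduced: int  # year of market introduction (rounded to the calendar year)
    lag_years: int   # assumed years before the system counts toward the bound


def lower_bound(systems, year):
    """Highest full-configuration CTP among systems whose lag has elapsed by `year`.

    Returns 0 when none of the listed systems qualifies yet.
    """
    eligible = [s.ctp_mtops for s in systems if s.introduced + s.lag_years <= year]
    return max(eligible, default=0)


# CTP figures and introduction dates are those cited in the text; the lag for
# each system is an ASSUMPTION following the two-year (rack) / one-year
# (deskside) convention, not a value taken from the study's data set.
SYSTEMS = [
    System("DEC AlphaServer 8400 5/350", 7639, 1996, 2),
    System("Sun Ultra Enterprise 4000/250", 7062, 1997, 1),
    System("DEC AlphaServer 4100 5/466", 4033, 1997, 1),
]

for year in (1997, 1998):
    print(year, lower_bound(SYSTEMS, year))
```

With only these three systems, the sketch finds no qualifying system for 1997 and a bound of 7,639 Mtops for 1998; a real projection would draw on the full set of platforms in each uncontrollable category.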

The Lower Bound Towards the Close of the Century

The course of the lower bound through the end of the century will depend on the decisions policy-makers make regarding:

1. whether to continue to use configuration performance as the basis for licensing decisions or to use end-user attainable performance instead; and, if the latter,

2. which categories of computing platforms are controllable, given the resources government and industry are willing and able to bring to bear on monitoring and enforcement.

If the export licensing process continues to use configuration performance as the basis for a licensing decision, then the lower bound of controllable performance will rise very quickly. Foreign entities will be able to purchase small configurations legitimately, and, in the field, add additional CPUs, memory, and I/O capability on their own to reach much higher levels of performance than they originally acquired. The lower bound of controllability is defined by the extent to which users by themselves can acquire and scale systems with small minimum configurations, such as rack or, in some cases, multi-rack systems. Using the two-year lag described earlier, the lower bound is likely to increase to over 23,000 Mtops in 1999 and close to 30,000 Mtops in the year 2000, given a continuation of current licensing practices.

If licensing practices are changed to consider end-user attainable performance rather than configuration performance, additional options become available to policy-makers. Rather than treating all configurations at a given CTP level as equivalent, an approach based on end-user attainable performance distinguishes systems that can be easily scaled to higher performance levels from those that cannot. For example, four processors in a chassis that can accommodate eight processors might be treated differently from four processors in a chassis that can accommodate sixteen. Control measures are then based directly on what concerns policy-makers: the performance an end-user can attain. This concept is discussed at length in Chapter 3. A regime based on end-user attainable performance provides policy-makers with a broader range of policy options because of two characteristics of the technologies and markets: (a) systems with higher end-user attainable performance can be distinguished and treated differently from systems with lower end-user attainable performance, and (b) systems with higher end-user attainable performance are more controllable than those with lower end-user attainable performance. End-user attainable performance correlates with a number of factors that affect the controllability of computing platforms, including the installed base, price, size, and dependence on vendor support. More powerful systems tend to be sold in smaller numbers, be more expensive, have larger configurations, and require greater amounts of vendor support for installation and maintenance. Table 34, reproduced from Chapter 3, illustrates some of these qualities for seven categories of computing systems available in the fourth quarter of 1997. Systems based on non-commodity processors, such as vector-pipelined processors, have end-user attainable performance equivalent to their configuration performance, since they are not upgradeable by end-users without vendor support.

| Type | Units installed | Price | End-user attainable performance |
|---|---|---|---|
| Multi-rack HPC systems | 100s | $750K-10s of millions | 20K+ Mtops |
| High-end rack servers | 1,000s | $85K-1 million | 7K-20K Mtops |
| High-end deskside servers | 1,000s | $90-600K | 7K-11K Mtops |
| Mid-range deskside servers | 10,000s | $30-250K | 800-4,600 Mtops |
| UNIX/RISC workstations | 100,000s | $10-25K | 300-2,000 Mtops |
| Windows NT/Intel servers | 100,000s | $3-25K | 200-800 Mtops |
| Laptops, uni-processor PCs | 10s of millions | $1-5K | 200-350 Mtops |

Table 34. Categories of computing platforms (4Q 1997)

Under a regime based on configuration performance, a dual-processor workstation, a dual-processor mid-range deskside system, and a dual-processor high-end rack server are indistinguishable, even though these platforms have rather different controllability factors. The use of end-user attainable performance makes it possible to distinguish between them and, more generally, to distinguish platforms that are controllable from those that are not. Which of these categories are controllable and which are not? Policy-makers must answer this question, since the answer is in large part a function of the resources government and industry are willing to bring to bear on enforcement.
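The difference between the two licensing bases can be illustrated with the eight-slot versus sixteen-slot chassis example given earlier in this section. This is a minimal sketch under stated assumptions: the 300 Mtops per-processor rating, the 2,000 Mtops threshold, and the aggregation rule (full credit for the first processor, 0.75 for each additional one) are hypothetical stand-ins rather than the CTP rules used in the study, and "attainable" is modeled simply as filling every slot an end user could fill without vendor support.

```python
from dataclasses import dataclass

# ASSUMPTION: a simplified aggregation rule used only for illustration. The
# first processor counts at its full rating; each additional identical
# processor is credited at 0.75 of its rating. Not offered as the official
# CTP formula.
AGGREGATION = 0.75


def aggregate_ctp(per_cpu_mtops, n_cpus):
    """Hypothetical CTP of n identical processors under the assumed rule."""
    if n_cpus < 1:
        raise ValueError("need at least one processor")
    return per_cpu_mtops * (1 + AGGREGATION * (n_cpus - 1))


@dataclass(frozen=True)
class Configuration:
    per_cpu_mtops: float
    installed_cpus: int  # processors in the configuration as exported
    chassis_slots: int   # processors an end user could install without vendor help

    def configuration_ctp(self):
        return aggregate_ctp(self.per_cpu_mtops, self.installed_cpus)

    def attainable_ctp(self):
        return aggregate_ctp(self.per_cpu_mtops, self.chassis_slots)


def needs_license(cfg, threshold_mtops, basis):
    """True if the rating on the chosen basis is at or above the threshold."""
    if basis == "configuration":
        rating = cfg.configuration_ctp()
    elif basis == "attainable":
        rating = cfg.attainable_ctp()
    else:
        raise ValueError(f"unknown basis: {basis!r}")
    return rating >= threshold_mtops


# Four hypothetical 300-Mtops processors in an 8-slot and a 16-slot chassis.
eight_slot = Configuration(300, 4, 8)
sixteen_slot = Configuration(300, 4, 16)
for cfg in (eight_slot, sixteen_slot):
    print(cfg.chassis_slots,
          needs_license(cfg, 2000, "configuration"),
          needs_license(cfg, 2000, "attainable"))
```

Both chassis look identical on a configuration basis (975 Mtops each under the assumed rule), while on an attainable basis only the sixteen-slot chassis reaches the hypothetical threshold.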

Table 35 shows four possible decision options and the corresponding projected lower-bound performance levels. As described earlier, the figures incorporate a lag time of two years for rack-based systems and some high-end deskside systems, and a lag time of one year for smaller systems. Lower-bound figures for 2001, and possibly for the end of 2000, are likely to increase sharply as markets mature for servers based on processors like Intel's Merced.


| Decision | 1998 | 1999 | 2000 |
|---|---|---|---|
| Option 1. Continue to use configuration performance as the basis for licensing decisions | 14,800 | 23,500 | 29,400 |
| Option 2. Use end-user attainable performance, and consider high-end rack-based systems controllable but high-end deskside systems uncontrollable | 7,100 | 11,300 | 13,500 |
| Option 3. Use end-user attainable performance, and consider high-end deskside systems controllable, but mid-range deskside systems uncontrollable | 4,600 | 5,300 | 6,500 |
| Option 4. Use end-user attainable performance, and consider mid-range deskside systems controllable, but workstations, PC servers, and PCs uncontrollable | 2,500 | 3,600 | 4,300 |

Table 35. Trends in Lower Bound of Controllability (Mtops)

Option 4 requires a great deal more export licensing and enforcement effort than Option 3, which in turn requires a great deal more effort than Option 2, and so on. In general, the more conservative the choice for the lower bound of controllability, the greater the resources and effort needed to support that decision.

How do these lower bound figures compare with the control thresholds established by the administration in 1995? End-user attainable performance did not then play a role in export licensing considerations. However, the numbers chosen in 1995 are not inconsistent with those shown above. The 7,000 Mtops threshold corresponds to the performance of rack-based systems introduced in 1995. The 2,000 Mtops threshold is slightly below the performance of mid-range deskside systems introduced in the same year. In short, these numbers lie along the same trend lines shown for later years under Option 1 and Option 2 in Table 35.
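Checking a candidate threshold against Table 35 amounts to a table lookup. The sketch below, with hypothetical function names, transcribes the table's projections and reports the first tabulated year in which a threshold would fall below the lower bound for each option. It treats a threshold as acceptable on the lower-bound side only if it is not below the projected figure, and it ignores the upper-bound considerations discussed below.

```python
from typing import Optional

# Projected lower bounds of controllability (Mtops), transcribed from Table 35.
LOWER_BOUND = {
    1: {1998: 14_800, 1999: 23_500, 2000: 29_400},
    2: {1998: 7_100, 1999: 11_300, 2000: 13_500},
    3: {1998: 4_600, 1999: 5_300, 2000: 6_500},
    4: {1998: 2_500, 1999: 3_600, 2000: 4_300},
}


def clears_lower_bound(threshold_mtops: int, option: int, year: int) -> bool:
    """True if the threshold is not below the projected lower bound."""
    return threshold_mtops >= LOWER_BOUND[option][year]


def first_year_below(threshold_mtops: int, option: int) -> Optional[int]:
    """Earliest tabulated year in which the threshold falls below the lower
    bound for an option, or None if it stays at or above it through 2000."""
    for year in sorted(LOWER_BOUND[option]):
        if not clears_lower_bound(threshold_mtops, option, year):
            return year
    return None


# The two thresholds set in 1995, checked against each decision option.
for threshold in (2_000, 7_000):
    print(threshold, {opt: first_year_below(threshold, opt) for opt in LOWER_BOUND})
```

By this simple comparison, a 7,000 Mtops threshold sits just below the Option 2 figure for 1998 (7,100 Mtops) but stays above the Option 3 and Option 4 figures through 2000, while a 2,000 Mtops threshold is below every option's figure from 1998 on.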

Upper Bound Trends

While a control threshold should be set so that it lies above a lower bound of controllability (determined by the policy decisions outlined above), it should at the same time lie low enough to protect computationally intensive applications of national security importance. Chapter 4 establishes that there exist applications of national security importance requiring computational performance above any lower bound figure shown in Table 35. The number of such applications is likely to grow dramatically in the coming years as increasing numbers of practitioners gain access to very powerful systems. There is no shortage of computationally demanding problems that will consume virtually any number of CPU cycles that become available. Solving problems on larger, higher-resolution grids, including more variables and higher-fidelity models, using shorter time-steps, and reducing turnaround time are only some of the goals driving the demand for more powerful systems. Solutions to coupled or multidimensional problems have astronomical computational requirements. These kinds of problems involve finding integrated solutions to a variety of very different problems concurrently. For example, in simulating the effects of explosions and post-explosion phenomena (e.g., bubbles) on submarines, the shock physics models need to be integrated with structures models. In aircraft design, the flow of air across the aircraft (a CFD problem) affects, and is in turn affected by, the shape of the aircraft, which may change under stress (a CSM problem). Often the phenomena being integrated have very different time scales and grids. As computational models grow in their fidelity to natural phenomena, computational methods have begun to dominate empirical ones. Testing has always been, and will continue to be, a crucial part of systems development, but computational methods have enabled practitioners to achieve a given level of understanding with fewer and better-designed tests. Reducing the turnaround time for a given simulation through the use of greater computational resources is critical to shortening the design process. The details of these and other trends are discussed in Chapter 4. The principal conclusion, however, is that the first basic premise, that computationally demanding applications of national security importance exist, is easily satisfied and will continue to be satisfied for the foreseeable future. The third basic premise, that there are applications requiring computational resources that are controllable, is also satisfied, for the present.

The methodology used for this report and its predecessor requires the establishment of an "upper bound" for the control threshold that lies at or above the lower bound, but below the performance of key applications of national security concern, or clusters of such applications. The answer to the question, 'what is the upper bound of a range of viable control thresholds?' is therefore a function both of the kinds and quantities of applications with requirements at various performance levels and of the lower bound resulting from the policy decisions outlined above.

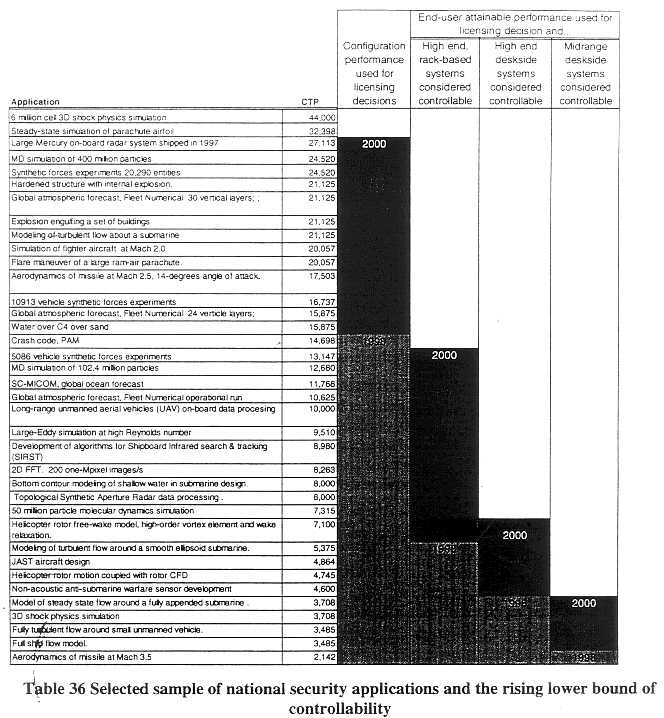

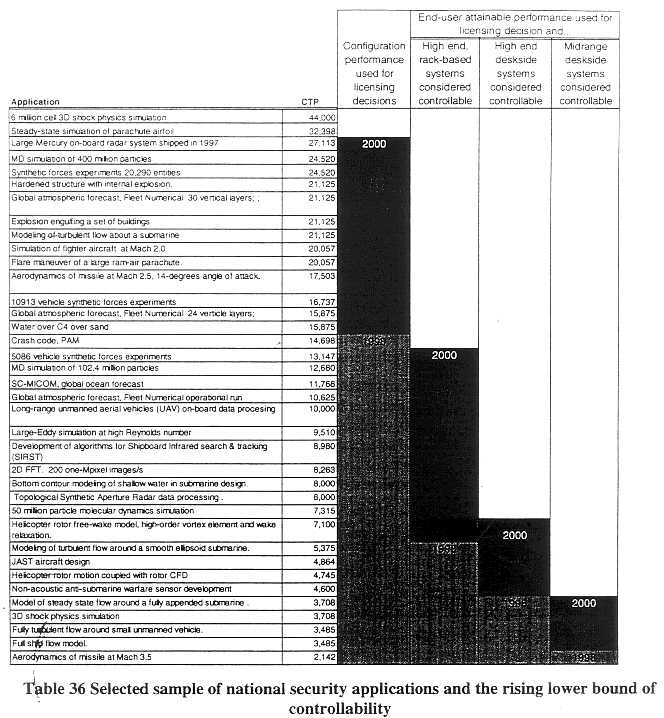

A major portion of this study has been devoted to identifying the kinds of application instances being solved at various levels of computational performance. These are listed in Appendix A, sorted by the CTP of the configuration used. Table 36 shows the rising trend lines for 1998-2000 of the lower bound of controllability under three of the options presented in Table 35. The tables show a sampling of applications that may fall below the lower bound of controllability in each of the three years, under each policy option.

Table 36 shows only a subset of the applications listed in Appendix A: those that, in the authors' estimation, are among the more significant. It is, however, the responsibility of the national security community to decide which applications are, in fact, the most important to protect. The identification of individual, particularly significant applications can be one factor in determining an upper bound for a control threshold.


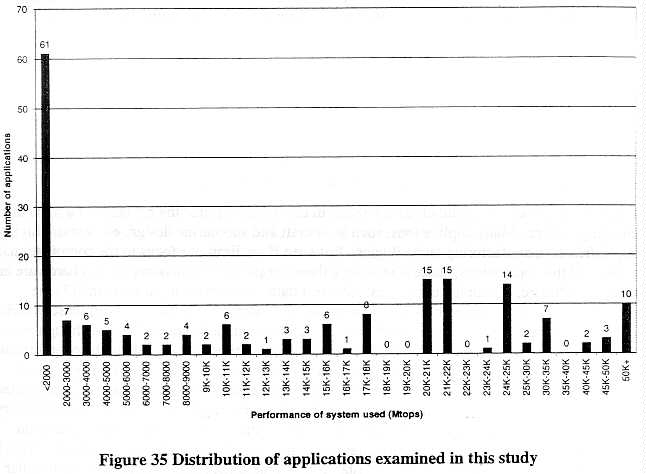

Another factor that may be taken into account in determining an upper bound is the relative density of applications of national security importance at various performance levels. The basic reality is that applications can be found across the entire spectrum of CTP levels. Any level of computing power can be useful, if a practitioner has the appropriate knowledge, codes, and input and verification (test) data. Nevertheless, are there performance levels at which one finds relatively larger numbers of applications than at others? Not surprisingly, these tend to be found around the performance levels of "workhorse" computing systems. Figure 35 shows the distribution of the CTP of configurations used for applications examined in this study and listed in Appendix A.

Clusters of particular interest lying above some of the lower bounds discussed above are:

1. 4,000-6,000 Mtops. Some key applications in this range include JAST aircraft design, non-acoustic anti-submarine warfare sensor development, and advanced synthetic aperture radar computation. The number of applications in this performance range is likely to grow rapidly, since the number of systems in this range is growing quickly.

2. 8,000-9,000 Mtops. Applications here include bottom contour modeling of shallow water in submarine design, some synthetic aperture radar applications, and algorithm development for shipboard infrared search and track.

3. 10,000-12,000 Mtops. Here we find a number of important weather-related applications.

4. 15,500-17,500 Mtops. In this range, we find substantial fluid dynamics applications able to model the turbulence around aircraft under extreme conditions.

5. 20,000-22,000 Mtops. This large cluster includes many applications run on full-configuration Cray C90s, including weather forecasting, the impact of (nuclear) blasts on underground structures, and advanced aircraft design.

While these clusterings may be residual effects of the kinds of computing platforms employed by US practitioners, they should be kept in mind by policy-makers as they decide where to set control thresholds for Tier 2 and Tier 3 countries.
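One crude way to read these clusters against Table 35 is to ask, for a given lower bound, how high a threshold could go while still lying at or below the lowest cluster that sits entirely above that bound. The sketch below does only that. It is an illustrative simplification with hypothetical names: it treats each cluster as a simple interval and does not weigh the relative importance of applications, which the text leaves to the national security community.

```python
from typing import Optional, Tuple

# Application clusters (Mtops) listed above: (floor, ceiling, summary).
CLUSTERS = [
    (4_000, 6_000, "JAST aircraft design, anti-submarine sensors, synthetic aperture radar"),
    (8_000, 9_000, "shallow-water bottom contours, radar, shipboard search and track"),
    (10_000, 12_000, "weather-related applications"),
    (15_500, 17_500, "turbulence modeling around aircraft"),
    (20_000, 22_000, "full-configuration Cray C90 applications"),
]


def viable_range(lower_bound: int) -> Optional[Tuple[int, int]]:
    """Return (lowest, highest) candidate thresholds: at or above the lower
    bound, and no higher than the floor of the lowest cluster lying wholly
    at or above that bound. Returns None if no such cluster exists."""
    floors = [floor for floor, _ceiling, _summary in CLUSTERS if floor >= lower_bound]
    if not floors:
        return None
    return (lower_bound, min(floors))


def straddling(lower_bound: int):
    """Clusters that the lower bound cuts through, hence only partly protectable."""
    return [c for c in CLUSTERS if c[0] < lower_bound < c[1]]


# Option 2 figure for 1999 and Option 1 figure for 2000, from Table 35.
print(viable_range(11_300), [c[2] for c in straddling(11_300)])
print(viable_range(29_400))
```

For the Option 2 figure for 1999 (11,300 Mtops), the sketch gives a range of 11,300 to 15,500 Mtops and flags the 10,000-12,000 Mtops weather cluster as cut by the bound; for the Option 1 figure for 2000 (29,400 Mtops), none of the listed clusters lies above the bound.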

Countries of National Security Concern

The discussion above has established that it is possible today to set a control threshold such that the first and third basic premises of the export control policy are satisfied. Does the second basic premise hold as well? Are there countries of national security concern with the scientific and military wherewithal to pursue the applications discussed in this study?

Pursuing nearly all the applications discussed in this study requires the presence of a number of enabling factors. Many applications, such as aircraft and submarine design, require highly sophisticated manufacturing technologies. But even if we limit our focus to the computational aspects of the applications, success rests on a three-legged stool consisting of: (1) hardware and systems software, (2) application codes, valid test data, and correct input data, and (3) the expertise necessary to know how to use the codes and interpret the results. If any of these is lacking, particularly items (2) and (3), the application cannot be pursued successfully. Although much of the theoretical basis for the applications discussed in this report is available in the open literature, building and using large application codes is a very difficult exercise. If a country lacks an established program in an application domain, the acquisition of a high-performance computer will not be sufficient to jump-start such a program. A promising way to develop true capability in one of these application domains is to start with small problems using small codes running on individual workstations and gradually migrate to larger problems, codes, and machines. This is a long-term process. If a country has not established some track record of pursuing a particular application over a multi-year period and given evidence of an ability to develop and effectively use the associated codes and data, then a high-performance computing system by itself will be of little or no value and pose little threat to U.S. national security interests.

Which countries have demonstrated the expertise necessary to use high-performance computers, and for which applications? One unquestionable example is nuclear weapons development and stockpile stewardship. Russian scientists and, to a lesser extent, Chinese scientists have demonstrated that they have the ability to use high-performance computing resources effectively to pursue these applications. However, the level of computational capability needed to develop the equivalent of today's U.S. stockpile, when nuclear test data is available, is at or below the performance of many workstations. Nevertheless, the U.S. Government has made it policy that stockpile stewardship, requiring much higher levels of capability, is an application of concern for U.S. national security interests.

Weather forecasting is another application in which a variety of countries have demonstrated long-term expertise. Furthermore, the need for high levels of computing power is even more critical here than in nuclear weapons development, in large part because of the large volumes of data and the strict timing constraints involved.

In contrast, many Tier 2 and Tier 3 countries have little or no experience in a host of national security applications. For example, there is little danger of most of these countries usefully employing HPC to develop submarines, advanced aircraft, composite materials, or a variety of other devices.

A critical question, which we have been unable to pursue satisfactorily in this study, is which countries are able to productively use HPC to pursue which applications? We have requested such information from the U.S. national security community, but have received few answers. It does not appear that the U.S. government is effectively gathering such intelligence in a systematic fashion. More specifically, the U.S. government does not appear to have as good an understanding of individual end use organizations of concern as is needed by the export control regime.

In contrast to nearly every other country in the world, the United States has a rich and deep base of experience, code, and data (not to mention hardware and systems software) that makes it uniquely positioned to pursue a very broad spectrum of computationally demanding applications. The U.S. community has perhaps benefited more than any other nation's from the dramatic growth of HPC capabilities and markets. The breadth and depth of U.S. capability in HPC applications is a national strategic advantage that should continue to be cultivated.

Export Control at the Turn of the Century

This study has concluded that the export control regime can remain viable through the end of the century, and it offers policy-makers a number of alternatives for establishing thresholds and licensing practices that balance national security interests and the realities of HPC technologies and markets. Some of these options lead to a more precise policy that can offer policy-makers a broader range of viable control thresholds than in the past. At the same time, several important trends in applications, technologies, and markets will affect not only the range within which thresholds may be viable, but also how successful the policy is in achieving its objectives. These are the trends that affect the lower bound of controllability and the application of HPC technologies, particularly those of a less controllable nature, to problems of national security importance.

Trends affecting the lower bound of controllability

Trends impacting the lower bound of controllability were discussed at length in Chapters 2 and 3. Those having the strongest impact on future policies include:


Trends in the highest-end systems

Improvements in microprocessors will drive the performance of uncontrollable platforms sharply upward. Because the highest-end systems will be built using these same microprocessors, however, they will ride the same performance curves. While today several million dollars must be spent to acquire a 50,000 Mtops computer, in a few years the same amount of money may acquire a system 10-20 times more powerful. There will continue to be low-end and high-end configurations, and a sizable performance gap between the two.

Will the lower bound of performance controllability ever rise to engulf all systems available to practitioners? If the number of systems whose performance is above the lower bound of performance controllability is zero, then no viable control threshold can be drawn without violating one of the basic premises of the export control policy. For the foreseeable future, the answer in the strictest sense will be 'no', as long as entities such as the U.S. government or commercial organizations are willing to fund, or participate in, leading-edge systems development. To say that the ASCI Red system at Sandia is 'just a collection of PCs' is incorrect. A great deal of specialized expertise, software, and, to a lesser degree, hardware is involved in the development of this kind of system. Given the highly dynamic, competitive, and innovative environment of the HPC industry, it is difficult to imagine that a well-funded group of vendor and practitioner experts would not be able to create a system that offered some level of capability not available to a practitioner in another country.

Computing systems with performance of one, ten, or one hundred Tflops, such as those created under the ASCI program, will continue to be available in very small numbers (at very high cost). The level of performance they provide will continue to be controllable. But what about the performance offered by systems installed in tens or hundreds of units? Will practitioners throughout the world be able to acquire or construct systems with a performance comparable to all but a few dozen of the world's most powerful systems? The answer depends heavily on the progress vendors make in the areas of scalability and manageability.

Trends in applications of national security importance

From the perspective of the export control regime, the most significant observation about HPC applications is that there will almost certainly always be applications of national security importance that demand more computational resources than are available. This has held true for decades.

The most significant change since the early 1990s has been the extent to which parallel platforms and applications have entered the mainstream of scientific and commercial, research and production activities. This shift has been fueled by a number of contributing factors:

While some applications remain wedded to parallel vector-pipelined systems, these are becoming the exception rather than the rule. The significance for the export control regime is that high-end systems are no longer required for developing applications that can run on high-end systems. In past decades, foreign practitioners were unable to gain the expertise necessary to use a Cray vector-pipelined system because they lacked access to this hardware; developing applications to run on a Cray without access to one would have been a pointless exercise. Today, however, MPI runs on all parallel platforms, from clusters of workstations to the largest integrated MPPs. Developing applications to run on workstation clusters provides a great deal of experience and code that can be ported to larger platforms as they become available. The lack of hardware may at present be a barrier to achieving high levels of performance, but it is no longer a great barrier to acquiring much of the expertise needed to use large systems.

Future effectiveness of the export control policy

While the export control policy remains viable at present, "leakage" of the policy is likely to become much more common in the future than it is today. The probability will increase that individual restricted end-user organizations will succeed in acquiring or constructing a computing system to satisfy a particular application need. This leakage will come about as a result of many of the trends discussed above.

First, if policy-makers do decide that systems with installed bases in the thousands are controllable, it is almost inevitable that individual units will find their way to restricted destinations. This should not necessarily be viewed as a failure of the regime or the associated governmental and industry participants. Rather, it is a characteristic of today's technologies and international patterns of trade.

Second, industry is working intensively towards the goal of seamless scalability, enhanced systems management, single-system image, and high efficiency across a broad range of performance levels. Systems with these qualities make it possible for users to develop and test software on small configurations yet run it on large configurations. An inability to gain access to a large configuration may limit users' ability to solve certain kinds of problems, but will not usually inhibit their ability to develop the necessary software or expertise.

Third, although clustered systems are likely to remain less capable than integrated vendor-provided systems over the next few years, they are improving both in the quality of their interconnects and in their supporting software. Foreign users are able to cluster desktop or deskside systems into configurations that perform useful work on some, perhaps many, applications. The control regime will not be able to prevent the construction of such systems. However, some advantage may be gained by restricting the export of tightly integrated, vendor-supplied and supported systems until clustered systems become comparable to them in every sense: systems management, I/O capability, software environment, and breadth of applications, as well as raw or even sustained performance. How much benefit such restrictions yield should be the subject of ongoing discussion.

Nevertheless, even an imperfect export control regime offers a number of benefits to U.S. national security interests.

First, licensing requirements force vendors and government to pay close attention to who the end users are and what kinds of applications they are pursuing. Vigilance is increased, and questionable end users may be more reluctant to acquire systems through legitimate channels.


Second, the licensing process provides the government with an opportunity to review end-users brought to its attention. It forces the government to increase its knowledge of end users throughout the world. The government should improve its understanding of end users of concern so that it can make better decisions regarding those end users.

Finally, while covert acquisition of computers is easier today than in the past, users without legitimate access to vendor support are at a disadvantage. The more powerful the system, the greater the dependence on vendor support. Moreover, operational or mission-critical systems are much more dependent on fast, reliable support. End users of concern pursuing these applications may be at a disadvantage even if the hardware itself is readily available.

Outstanding Issues and Concerns

Periodic Reviews. This study documents the state of HPC technologies and applications during 1997 and early 1998, and it makes some conservative predictions of trends over the next two to five years. The pace of change in this industry continues unabated. The future viability of the export control policy will depend on its keeping abreast of change and adapting in an appropriate and timely manner. Policy revisions based on accurate, timely data and an open analytic framework are sounder, more verifiable, and more defensible. There is no substitute for periodic review and modification of the policy. While annual reviews may not be feasible given policy review cycles, the policy should be reviewed at least every two years.

The use of end-user attainable performance in licensing. Using end-user attainable performance in licensing decisions is a departure from past practice. It is a more conservative approach to licensing because it assumes a worst-case scenario: that end-users will increase the performance of a configuration they obtain to the extent they can. By the same token, however, it reduces or eliminates a very problematic element of export control enforcement, namely ensuring that end-users do not increase their configurations beyond the level for which the license was granted. If U.S. policy-makers do not adopt the use of end-user attainable performance, the burden of ensuring post-shipment compliance will remain on the shoulders of HPC vendors and U.S. government enforcement bodies. If they do adopt it, post-shipment upgrades made without the knowledge of U.S. vendors or the U.S. Government should not be a concern, because such upgrades will already have been taken into account when the license was granted.

Applications of national security importance. The current study has surveyed a substantial number of applications of national security importance to determine whether there are applications that can and should be protected using export controls on high-performance computing. While the study has enumerated a number of applications that may be protected, it has not answered the question of which applications are of greatest national security importance and should be protected. Only the national security community can answer this question, and it is important that it be answered. If an application area lacks a constituency willing to defend it in the public arena, it is difficult to argue that it should be a factor in setting export control policy.

During the Cold War, when the world's superpowers were engaged in an extensive arms race and were building competing spheres of influence, it was relatively easy to argue that certain applications relying on high-performance computing were critical to the nation's security. Because of changes in the geopolitical landscape, in the nature of threats to U.S. national security, and in HPC technologies and markets, that argument appears to be more difficult to make today than in the past. We have found few voices in the applications community who feel that export control of HPC hardware is vital to the protection of their application. Constituencies for nuclear and cryptographic applications exist, although they are not unanimous in their support of the policy. The absence of constituencies in other application areas that strongly support HPC hardware export controls may reflect an erosion of the basic premises underlying the policy. If this is the case, it should be taken into account; where such constituencies exist, they should enter into the discussion.
